A Truly Responsive WebXR Experiment: A-Painter XR

(originally published on the Mozilla MR Blog, March 2018. Just in case Mozilla shuts down the blog after shutting down the team, I'm preserving the posts I wrote for the blog.)

In our posts announcing our Mixed Reality program last year, we talked about some of the reasons we were excited to expand WebVR to include AR technology. In the post about our experimental WebXR Polyfill and WebXR Viewer, we mentioned that the WebVR Community Group has shifted to become the Immersive Web Community Group and the WebVR API proposal is becoming the WebXR Device API proposal. As the community works through the details of these changes, this is a great time to step back and think about the requirements and implications of mixing AR and VR in one API.

In this post, I want to illustrate one of the key opportunities enabled by integrating AR and VR in the same API: the ability to build responsive applications that work across the full range of Mixed Reality devices.

Some recent web articles have explored the idea of building responsive AR or responsive VR web apps, such as web pages that support a range of VR devices (from 2D to immersive) or the challenges of creating web pages that support both 2D and AR-capable browsers. The approaches in these articles have focused on creating a 3D user-interface that targets either AR or VR, and falls back to progressively simpler UIs on less capable platforms.

In contrast, WebXR gives us the opportunity to have a single web app respond to the full range of MR platforms (AR and VR, immersive and flat-screen). This will require developers to consider targeting a range of both AR and VR devices with different sorts of interfaces, and falling back to lesser capabilities for both, in a single web app.

Over the past few months, we have been experimenting with this idea by extending the WebVR paint program A-Painter to support handheld AR when loaded in an appropriate web browser, such as our WebXR Viewer or Google’s WebARonARCore and WebARonARKit browsers. We will dig deeper into this idea of building apps that adapt to the full diversity of WebXR platforms, beyond just these two, in a future blog post.

An Adaptive UI: A-Painter XR

Let’s start with a video of some samples we created for the WebXR Viewer, our iOS app.

This video ends with a clip of the WebXR version of A-Painter. Rather than simply port A-Painter to support handheld AR, we extended it so that both the VR and handheld AR UIs are integrated in the same app, with the appropriate UI selected based on the capabilities of the user’s device. This project was undertaken to explore the idea of creating an “AR Graffiti” experience where users could paint in AR using the WebXR Viewer, and required us to create an interface designed for the 2D touch screens on these phones. The result is shown in this video.
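As a rough illustration of what "selecting the UI based on the capabilities of the user's device" can look like, here is a minimal sketch. The capability flags, the mode names, and the `selectUiMode` function are all hypothetical, invented for this example; they are not taken from the A-Painter XR source, and the flat-screen fallback is an assumption.

```typescript
// Hypothetical capability flags; none of these names come from A-Painter XR.
interface DeviceCapabilities {
  immersiveDisplay: boolean;   // a head-worn display is available
  trackedControllers: boolean; // 6DOF tracked controllers are available
  worldTracking: boolean;      // 6DOF device tracking (e.g., ARKit or ARCore)
  touchScreen: boolean;        // a 2D touch screen is available
}

type UiMode = "vr-controllers" | "handheld-ar-touch" | "flat-2d";

// Pick the richest UI the device can support, falling back step by step.
function selectUiMode(caps: DeviceCapabilities): UiMode {
  if (caps.immersiveDisplay && caps.trackedControllers) {
    return "vr-controllers"; // menus and painting attached to the controllers
  }
  if (caps.worldTracking && caps.touchScreen) {
    return "handheld-ar-touch"; // 2D buttons around the edges of the screen
  }
  return "flat-2d"; // assumed fallback for devices with neither
}
```

The point of keeping this decision in one place is that the rest of the app (brushes, palettes, stroke data) can stay shared, with only the input and menu layer swapped out.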

This version of A-Painter XR currently resides in the “xr” branch of the A-Painter GitHub repository and is hosted at https://apainter.webxrexperiments.com. (There is a direct link to the page, along with links loading pre-created content, on the startup page for the WebXR Viewer.) I’d encourage you to give it a try. The same URL works on all the platforms highlighted in the video.

There were two major steps to making A-Painter XR work in AR. First, the underlying technology needed to be ported from WebVR to our WebXR polyfill. Because A-Painter is built on A-Frame, which in turn is built on three.js, we updated both libraries to work with WebXR instead of only supporting WebVR. (You can use these modified libraries yourself to explore using WebXR with your own apps, until the WebXR Device API is finalized and WebXR support is added to the official versions of each of them.)

The second step was creating an appropriate user interface. A-Painter was designed for fully immersive WebVR, on head-worn displays like the HTC Vive, and uses 6DOF tracked controllers for painting and all UI actions, as shown in this image of a user painting with Windows MR controllers. The user can paint with either controller, and all menus are attached to the controllers.

But interacting with a touch-based handheld display is quite different than interacting in fully immersive VR. While the WebXR Viewer supports 6DOF movement (courtesy of ARKit), there are no controllers (or reliable hand or finger tracking) available yet on these platforms. Even if tracked controllers were available, most users would not have them, so we focused on creating a 2D touch UI for painting.

UI Walkthrough for Touchscreen AR Painting

The first change to the user experience is not shown in the video, but is required for any web app that wants to bridge the gap between AR and VR: the XR application needs to start with the user or system defining a place in the world (an “anchor”) for the content.

When a user installs and sets up a VR platform, like an HTC Vive or a Windows MR headset, they define a fixed area in the room in which all VR experiences happen. When A-Painter is run on a VR platform, the center of that fixed area of the room is automatically used as the starting point, or anchor, for the painting.

AR platforms like ARKit and ARCore do not define a fixed area for the user to work in, nor do they provide any well-defined location that could be used automatically by the system as the anchor for content. That is why most current AR experiences on these platforms start by having the user pick a place in the world, often on a surface detected by the system, as an anchor. The AR interface for A-Painter XR starts this way.
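The difference between the two platforms can be sketched as a small function. This is only an illustration under stated assumptions: the `PlatformInfo` shape and the `resolveAnchor` function are hypothetical names made up for this example, not part of A-Painter XR or the WebXR polyfill.

```typescript
type Vec3 = [number, number, number];

// Hypothetical description of what the platform can tell us about the space.
interface PlatformInfo {
  kind: "vr-room" | "handheld-ar";
  roomCenter?: Vec3;      // center of the fixed area defined during VR setup
  userPickedPoint?: Vec3; // point the user tapped, e.g., on a detected surface
}

// Returns the anchor for the painting, or null if the app must first ask
// the user to pick a place in the world.
function resolveAnchor(platform: PlatformInfo): Vec3 | null {
  if (platform.kind === "vr-room") {
    // VR: the center of the configured area is used automatically.
    return platform.roomCenter ?? [0, 0, 0];
  }
  // Handheld AR: there is no well-defined default, so wait for the user.
  return platform.userPickedPoint ?? null;
}
```

A `null` result corresponds to the first step of the AR interface: the app stays in a "pick a place" state until the user has chosen an anchor.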

After selecting an anchor for the painting, the user is presented with an interface to paint in the world. The interface is analogous to the VR interface, but made up of 2D buttons arranged around the edges of the screen, rather than widgets attached to one of the VR controllers.

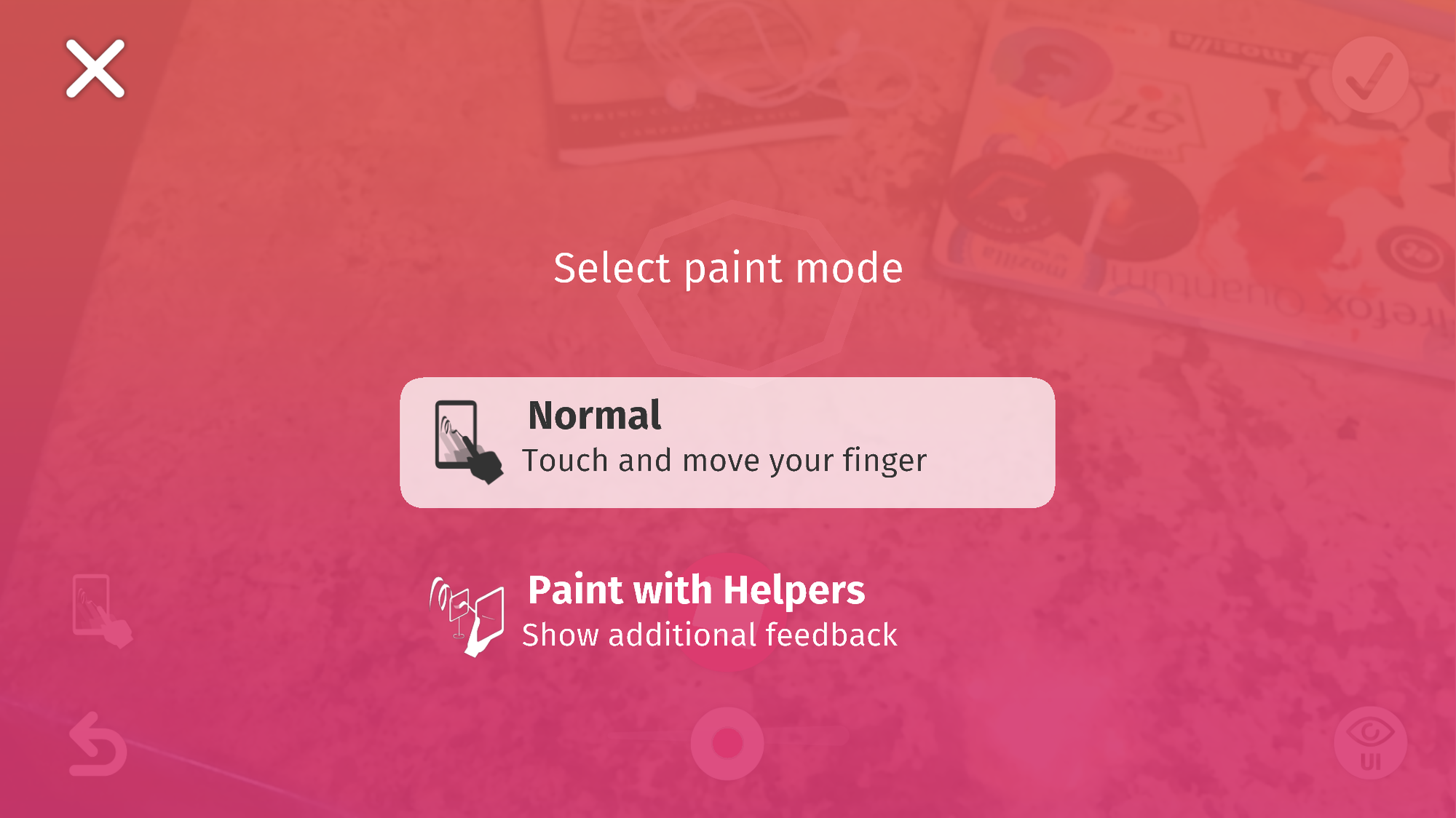

Paint mode (see below) and undo/redo are in the lower left, a save button is in the upper right, and a slider to change the brush size is in the center bottom under a button to change the brush selection. To keep the screen uncluttered, the brush selection UI is normally hidden, being brought up on request.

Much of the code and visual elements for this interface are shared with the VR version (e.g., icons and palettes), and the application state is similar. But the user experience is tuned for the two different platforms.

Digging Deeper into Touch Screen Painting

While the UI described above, and shown in the video, works reasonably well, there is a subtle problem that may not be obvious until you try to create a 3D painting yourself. In immersive VR, the graphics are drawn from the viewpoint of your eyes (or as close to them as the system can get) and you paint with the VR controller. The controller is in your hand in 3D space, and defines a single 3D location, so there is no ambiguity as to where the strokes should go: they are placed where the tip of the controller was when you pressed the button. Painting in immersive AR while wearing a see-through head-worn display would be similar.

In handheld AR, you interact with a view that seems to be a transparent view of the physical world. But while so-called “video-mixed AR” gives the illusion that the screen is transparent, with graphics overlaid on it, that illusion is slightly misleading. The video being shown on the display is captured from a camera on the back of the phone or tablet, so the viewpoint of the scene is a place on the back of the device, not the user’s eyes (as it is with head-worn displays). This is illustrated in this image.

The camera on my iPhone 7 Plus is in the upper right of the phone in the image; the red lines show the field of view of the camera. Notice, for example, that the front corner of the book appears near the center of the phone screen, but is actually near the left of the phone in this image; similarly, the phone appears to be looking along the left edge of my laptop, but the view from the camera is showing a much more extreme view of the side of the laptop.

In most of the AR demos coming out for ARKit and ARCore, this doesn’t appear to matter (and, even if it creates problems for the user, these problems aren’t obvious in the videos posted to YouTube and Twitter!). But it matters here because when a user draws on the screen, it isn’t at all clear where they expect the stroke they are drawing to actually end up.

Perhaps they imagine they are drawing on the screen itself? I might hold the phone and draw on it, expecting that the phone is like a piece of glass, and my drawing will be in 3D space exactly where the screen is. In some cases, this might be exactly what the user wants. But that is difficult to implement because the view I’m seeing is actually video images of the world behind and slightly to the left of the phone (the area between the red lines in the image above): if we put the strokes where the screen is, they wouldn’t be visible until the user stepped backwards away from where they drew them, and they wouldn’t be where the video was showing them to be when they drew.

Perhaps they imagine they are drawing on the physical world they see on the screen? But, if so, where? In the image above, when I touch the screen where the yellow/black dot is, the dot actually corresponds to a line shooting out of the camera in the direction shown. There are an infinite number of points on this line that someone might reasonably expect the paint stroke to appear on. I might intend for the paint to be placed in front of the corner of the book (point A), on the corner of the book (point B), or somewhere behind the book (point C). There’s no way we can know what the user wants in this case.
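To make the ambiguity concrete, here is a minimal sketch of how a touch maps to a ray rather than a point. It assumes a simple pinhole camera looking down the negative Z axis (the three.js convention), with the video filling the whole screen; the function names and parameters are hypothetical and chosen for this example only.

```typescript
type Vec3 = [number, number, number];

// Convert a touch position (in pixels, origin at top-left) into a unit
// direction in camera space. Assumes a pinhole camera looking down -Z.
function touchToRayDirection(
  touchX: number,
  touchY: number,
  screenWidth: number,
  screenHeight: number,
  verticalFovRadians: number
): Vec3 {
  // Normalized device coordinates in [-1, 1], with +Y pointing up.
  const ndcX = (2 * touchX) / screenWidth - 1;
  const ndcY = 1 - (2 * touchY) / screenHeight;
  // Half-extents of the view frustum at a depth of 1 unit.
  const halfHeight = Math.tan(verticalFovRadians / 2);
  const halfWidth = halfHeight * (screenWidth / screenHeight);
  const x = ndcX * halfWidth;
  const y = ndcY * halfHeight;
  const z = -1;
  const length = Math.hypot(x, y, z);
  return [x / length, y / length, z / length];
}

// Every t > 0 gives a different candidate 3D point for the same touch.
function pointOnRay(origin: Vec3, direction: Vec3, t: number): Vec3 {
  return [
    origin[0] + t * direction[0],
    origin[1] + t * direction[1],
    origin[2] + t * direction[2],
  ];
}
```

Points A, B, and C in the discussion above correspond to three different values of `t` passed to `pointOnRay` with the same origin and direction. The touch alone does not determine `t`, which is why the app has to pick a rule.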

In our interface, we opted to place all points at a fixed distance of ½ meter behind the phone. While not the right choice for all situations, this distance is at least predictable. However, it is hard for users to visualize where a stroke will be placed before they draw it. To help, we experimented with an alternative painting mode that adds visual feedback showing where strokes will appear. The “Paint Mode” button in the lower left lets the user switch between these two paint modes.
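One way to realize a fixed painting distance is to intersect the touch ray with a plane that sits ½ meter in front of the camera. The sketch below assumes that the plane is perpendicular to the camera's view direction and that the distance is measured along that direction; both are assumptions made for illustration, and the names (`PAINT_DISTANCE_METERS`, `strokePointForTouch`) are hypothetical rather than taken from A-Painter XR.

```typescript
type Vec3 = [number, number, number];

const PAINT_DISTANCE_METERS = 0.5; // the fixed distance described above

function dot(a: Vec3, b: Vec3): number {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Intersect a touch ray with the plane PAINT_DISTANCE_METERS in front of the
// camera. All vectors are in world space; cameraForward and rayDirection are
// assumed to be unit length. Returns null if the ray never reaches the plane.
function strokePointForTouch(
  cameraPosition: Vec3,
  cameraForward: Vec3,
  rayDirection: Vec3
): Vec3 | null {
  const alignment = dot(rayDirection, cameraForward);
  if (alignment <= 1e-6) return null; // ray is parallel to or behind the plane
  const t = PAINT_DISTANCE_METERS / alignment;
  return [
    cameraPosition[0] + t * rayDirection[0],
    cameraPosition[1] + t * rayDirection[1],
    cameraPosition[2] + t * rayDirection[2],
  ];
}
```

With this rule, every touch lands at the same depth relative to the phone, so the user can control depth only by physically moving the device. That is the predictability referred to above.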

Turning on “Paint with Helpers” mode shows a translucent rectangle positioned at the painting distance behind the screen, representing the plane that paint will be drawn on. The image below has some dark blue paint in the world near the user.

Any paint behind the drawing plane is rendered in a transparent style behind the rectangle; any paint in front of the plane occludes the rectangle. Looking around with the phone or tablet causes the rectangle to slice through the painting, helping the user anticipate where paint will end up.
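The geometric rule behind this feedback can be sketched as a depth comparison against the drawing plane. This is only an illustration of the rule, not a description of how A-Painter XR renders it; it assumes the plane is perpendicular to the camera's view direction, and the function and type names are hypothetical.

```typescript
type Vec3 = [number, number, number];

type PaintStyle = "translucent-behind-plane" | "opaque-in-front-of-plane";

// Depth of a world-space point along the camera's (unit length) view direction.
function depthAlongView(point: Vec3, cameraPosition: Vec3, cameraForward: Vec3): number {
  return (
    (point[0] - cameraPosition[0]) * cameraForward[0] +
    (point[1] - cameraPosition[1]) * cameraForward[1] +
    (point[2] - cameraPosition[2]) * cameraForward[2]
  );
}

// Decide how existing paint at `point` relates to the helper rectangle.
function styleForPaint(
  point: Vec3,
  cameraPosition: Vec3,
  cameraForward: Vec3,
  paintDistanceMeters: number
): PaintStyle {
  const depth = depthAlongView(point, cameraPosition, cameraForward);
  return depth > paintDistanceMeters
    ? "translucent-behind-plane"  // seen through the rectangle
    : "opaque-in-front-of-plane"; // occludes the rectangle
}
```

Because the classification depends on the camera pose, the same stroke changes style as the user moves the phone, which is what produces the "slicing" effect.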

We considered, but didn't implement, other paint modes (some of which you might be thinking of right now), such as “Paint on World” where strokes would be projected only on the surfaces detected by ARKit. Or perhaps “Paint on a Virtual Prop”, where a 3D scene is placed in the world, painted on, and then removed.

What’s Next for WebXR?

In this post, I introduced the idea of having a single WebXR web app be responsive to both AR and VR, using A-Painter XR as an example. I walked through the AR UI we created for A-Painter XR, emphasizing how the different context of “touch screen video-mixed AR” required a very different UI, but that much of the application code and logic remained the same.

While many WebXR apps might be best suited to either AR or VR, immersive or touch-screen, the great diversity of devices people will use to experience web content makes it essential that future WebXR app developers seriously consider supporting both AR and VR whenever possible. The true power of the web is its openness; we've come to expect that information and applications are instantly available to everyone regardless of the platform they choose to use, and keeping this true in the age of AR and VR will in turn keep the web open, alive and healthy.

We’d love to hear what you think about this. Reach out to us at @mozillareality on Twitter!

(Thanks to Trevor Smith (@trevorfsmith), Arturo Paracuellos (@arturitu) and Fernando Serrano (@fernandojsg) for their work on A-Painter XR, the webxr polyfill, three.xr.js, and aframe-xr. Thanks to Trevor and Roberto Garrido (@che1404) for their work on the WebXR Viewer iOS app. Arturo also created the A-Painter XR video.)