Food Blogs and the Future of MR on the Web

Last year, I posted some musings on the question "What is an MR Blog?" Perhaps this question would be better phrased as "What will the equivalent of the common blog be for MR content?", since "blog" is really just a placeholder for "a non-trivial collection of content that lots of people can create and share".

While web-based MR will be used for many of the same applications and experiences as native MR (games, for example), the exciting thing about the web is the sheer volume and variety of small bits of content, from opinions to stories to art to commentary to news, on self-hosted sites or on platforms like WordPress and Tumblr.

I've been putting this question to people over the past month or so, and have started to think about the problem differently. I suspect these new media forms will emerge organically, but for that to happen, people need to be able to easily create and explore ideas for MR experiences. That makes this an end-to-end problem: blogging is possible because the content elements people put in blogs (text, pictures, videos) are easy to create, edit and mix together. The creative challenge lies in the ideas and in the creation and curation of individual elements, and the bar for creating something passable is very low: professional-quality sites, with beautiful photographs and prose, can take a lot of time to create, but passable pictures and text can be created quickly by anyone.

So, asking "what is the MR equivalent to a blog?" is intimately tied to the question "what kind of 3D content can we create, edit and mix together, easily and quickly?" Answering this question is essential to even considering "what kinds of compelling experiences can we create with that content?"

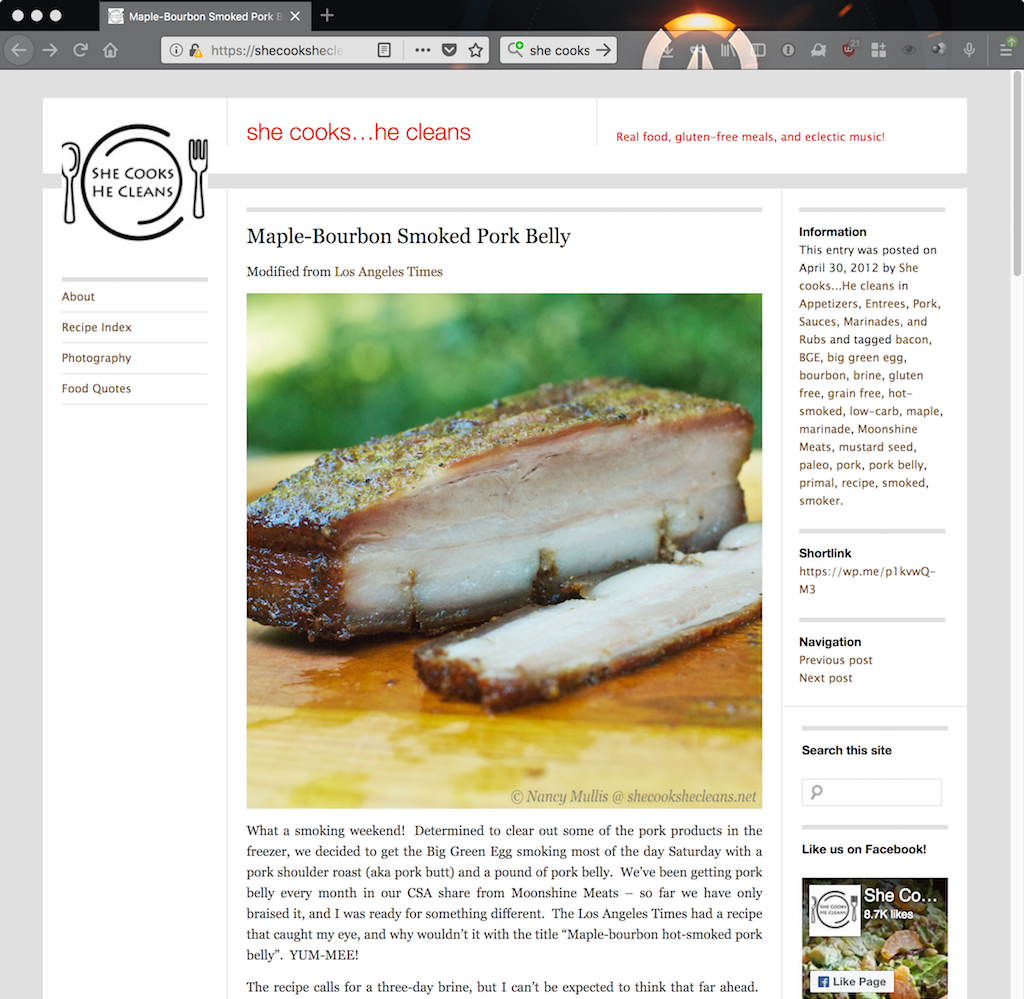

One example that crystallized this for me was food blogs. Over the holidays, I found myself looking up recipes on the web and running across a lot of interesting food blogs, such as David Lebovitz's blog, that were more than just traditional collection-of-recipes sites like allrecipes.com.

Food blogs often mix recipes and stories, and are more blog or magazine than recipe book. They can get quite detailed, too, and usually include lots of images and videos.

When you think about what it takes to create one of these posts, the steps are those mentioned above: gather images of the food and/or the cooking process, write the text, and lay out the story using whatever tools your blog system provides[1]. This might require HTML and CSS editing or, more likely, higher-level tools like WordPress's editor or (as I use on this blog) Liquid and Markdown tags that are processed by Jekyll.

What would the analogous steps be for adding MR content to stories like this? Cooking is one of the most commonly cited examples of how AR content might be useful to consumers: a cook's hands are busy (and often dirty), they move around the space (away from a small single screen like a phone or laptop), they do many things at once, and they often need to refer to instructions while doing the task. So, what would be required to take the pasta recipe above and have some sort of "responsive MR web template" display the content on a head-worn AR display?

Let's ignore navigation and interaction: how the cook "pages through the steps" will be tied to the input capabilities of their device, and might include gestures and voice. Let's also ignore any sort of magical AI that recognizes what the user has or hasn't done, knows that ingredients need to be chopped "over there", works out which part of the recipe each pot on the stove corresponds to, or talks to internet-connected appliances and relates them to parts of the instructions.

Just consider the simplest case, analogous to the pasta recipe above: the author wants to show the cook a series of steps, illustrated with visuals that help them see each step and understand what the author considers its essential parts.

The first challenge is layout. For that, an MR-capable design template might provide relatively simple, predictable support for authors, without tackling sophisticated layout problems. For example, imagine an author being able to specify "I need 3 places near the user: a fixed location for some descriptive text, a fixed location for visual elements, and a floating location that stays near the user for reminders and status".
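To make the "three places" idea a little more concrete, here is a minimal TypeScript sketch of how such a template might describe its regions. Everything in it is hypothetical: the `Region` type, the `recipeLayout` array and the `updateFollow` helper are illustrative names, not part of WebXR or any existing template system, and distances are assumed to be in meters. The floating region uses one simple policy (pull it back toward the user when it drifts beyond `maxDistance`).

```typescript
// Hypothetical template layout spec; none of these names come from a real API.
type Vec3 = { x: number; y: number; z: number };

type SurfaceKind = "vertical" | "horizontal";

// A region is either anchored to a surface the user picks, or follows the user.
type Region =
  | { id: string; mode: "anchored"; surface: SurfaceKind }
  | { id: string; mode: "follow"; maxDistance: number }; // meters (assumed unit)

// The author's "three places" request from the recipe example.
const recipeLayout: Region[] = [
  { id: "text", mode: "anchored", surface: "vertical" },     // descriptive text
  { id: "visuals", mode: "anchored", surface: "horizontal" }, // visual elements
  { id: "status", mode: "follow", maxDistance: 0.8 },         // reminders/status
];

// Keep a follow region within maxDistance of the user's head: if it has
// drifted further away, move it back along the line toward the head.
function updateFollow(regionPos: Vec3, head: Vec3, maxDistance: number): Vec3 {
  const dx = regionPos.x - head.x;
  const dy = regionPos.y - head.y;
  const dz = regionPos.z - head.z;
  const dist = Math.hypot(dx, dy, dz);
  if (dist <= maxDistance) return regionPos; // close enough; leave it in place
  const s = maxDistance / dist; // scale the offset down to exactly maxDistance
  return { x: head.x + dx * s, y: head.y + dy * s, z: head.z + dz * s };
}
```

A real template would probably smooth this movement over several frames rather than snapping, so the status panel doesn't jitter as the cook moves around.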

The page generated by this template might then ask the user up front "Point to a vertical surface where you want the text to appear; now point to a horizontal surface where you would like the visual instructions to be placed"[2]. Such a template might also allow the author to tag areas of content in their story as belonging to one of these areas (including possibly having different bits of content for 2D and MR presentation, just as some responsive sites do now for phone and desktop presentation).
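Tagging content by region, with separate 2D and MR variants, could be as simple as the following sketch. Again, `ContentItem`, `contentFor` and the sample file names are assumptions made up for illustration, and the fallback rule (use the 2D variant when no MR-specific one exists) is just one plausible choice.

```typescript
// Hypothetical content model: each item is tagged with a layout region and may
// carry separate 2D and MR variants, much as responsive sites serve different
// content to phones and desktops. All names here are illustrative.
type Presentation = "2d" | "mr";

interface ContentItem {
  region: "text" | "visuals" | "status";
  variants: Partial<Record<Presentation, string>>; // e.g. text or a file URL per mode
}

// Collect what to show in one region for the current presentation mode,
// falling back to the 2D variant when no MR-specific content exists.
function contentFor(
  items: ContentItem[],
  region: ContentItem["region"],
  mode: Presentation,
): string[] {
  return items
    .filter((item) => item.region === region)
    .map((item) => item.variants[mode] ?? item.variants["2d"])
    .filter((v): v is string => v !== undefined);
}

// One recipe step: plain text everywhere, but a 3D model in MR.
const step1: ContentItem[] = [
  { region: "text", variants: { "2d": "Knead the dough until smooth." } },
  { region: "visuals", variants: { "2d": "kneading.jpg", mr: "kneading.glb" } },
];
```

With this sample step, `contentFor(step1, "visuals", "mr")` returns `["kneading.glb"]`, while `contentFor(step1, "text", "mr")` falls back to the 2D text, so authors only need to supply MR variants where they add something.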

While vague, this feels tractable. But it raises the question, "What are the content elements, especially the visual elements?" Surely such an author wouldn't want to place only images, text and video in the 3D world.

The second challenge, then, is content creation. One of the immediate appeals of MR is the possibility of integrating virtual 3D content into the user's view of the physical world, letting people view things from different sides and possibly giving them new or better insights into something.

What if creating a 3D model of the food and ingredients at each step in a recipe were as easy as taking and editing pictures? Consider this 3D model of Turkish baklava, created by Kabaq Augmented Reality Food and hosted on Sketchfab:

While still difficult, capturing realistic models of real objects is getting easier. This is different from the work being done to make editing 3D models easier, such as Google's Blocks in-situ 3D editor. For our purposes here, these manual editing approaches completely miss the mark. No matter how "easy" they are (relative to professional tools like Maya or 3D Studio), manual editing will never be fast enough, or capable of creating 3D versions of food on par with a photograph.

This kind of experience needs a 3D equivalent to the photograph: something that attempts to capture what a part of the real world really looks like, and that can be displayed in 3D. The approach used to create the baklava above is called photogrammetry. It involves collecting many images of an object and extracting the 3D structure and material properties from them. Various software solutions have been developed over the years, but they have traditionally been difficult and time-consuming to use, especially if you want a polished model without unsightly artifacts or holes. New solutions that aim to simplify the process are popping up, including a relatively cheap, turnkey solution HP showed at CES this year (the Z 3D Camera). Approaches like this are promising, and point the way toward rapid creation of simple MR experiences.

But why stop at pictures and static models? More sophisticated experiences might want to include 3D equivalents of video: imagine seeing the hands of a chef kneading the dough above, on the counter beside where you are working. Creating, storing and rendering 3D video is a much more challenging task than creating a single 3D model, but it is also getting closer to reality. Here's a video clip of the current web page for one project trying to solve this problem:

While this solution is not accessible to average consumers, it demonstrates that it is feasible, and points the way toward possible solutions.

This challenge of capturing, editing and sharing static or moving parts of the world, as easily as amateurs can currently create and share pictures and videos, is going to be a key hurdle that must be crossed if non-professionals are going to create and share MR content. There will be other elements needed to support a broad spectrum of content and experiences, of course: easily animated avatars (to act as stand-in "actors" in stories), more natural ways of controlling how content is laid out in a space (in contrast to the simple "three places" example above), and social services for creating shared experiences, to name a few.

Whatever these bits of content and underlying services are, the tools presented to end users will need to be simple and accessible if MR on the Web is going to achieve the kind of broad and varied use, the long tail of content, that we have come to know and love.

I agree that photogrammetry, and easy ways of creating 3D content generally, will be one of the keys to the future of web-based MR.

For example, Sony has a promising device, the XZ1, which lets you capture real objects and port them to the web in a very simple way: https://youtu.be/lOVKgOAlCd...

I think that with these kinds of tools, plus easy-to-use 3D editors for creating and remixing 3D content on touchscreen devices, and well-designed interactive embed containers that are easy to integrate into HTML pages and work everywhere from desktop browsers and touchscreen devices to VR- and AR-capable devices, we could build a great ecosystem that makes awesome MR experiences accessible to a huge audience.

Nice; it'll be great when there are more examples of this sort of thing!