MrEd, an Experiment in Mixed Reality Editing

(originally published on the Mozilla MR Blog, June 2019, by Josh Marinacci. This summarizes a project Josh, Anselm Hook, and I did. Just in case Mozilla shuts down the blog after shutting down the team, I'm preserving these posts here.)

We are excited to tell you about our experimental Mixed Reality editor, an experiment we did in the spring to explore online editing in MR stories. What’s that? You haven’t heard of MrEd? Well please allow us to explain.

For the past several months Blair, Anselm and I have been working on a visual editor for WebXR called the Mixed Reality Editor, or MrEd. We started with this simple premise: non-programmers should be able to create interactive stories and experiences in Mixed Reality without having to embrace the complexity of game engines and other general purpose tools. We are not the first people to tackle this challenge; from visual programming tools to simplified authoring environments, researchers and hobbyists have grappled with this problem for decades.

Looking beyond Mixed Reality, there have been notable successes in other media. In the late 1980s Apple created a ground breaking tool for the Macintosh called Hypercard. It let people visually build applications at a time when programming the Mac required Pascal or assembly. It did this by using the concrete metaphor of a stack of cards. Anything could be turned into a button that would jump the user to another card. Within this simple framework people were able to create eBooks, simple games, art, and other interactive applications. Hypercard’s reliance on declaring possibly large numbers of “visual moments” (cards) and using simple “programming” to move between them is one of the inspirations for MrEd.

We also took inspiration from Twine, a web-based tool for building interactive hypertext novels. In Twine, each moment in the story (seen on the screen) is defined as a passage in the editor as a mix of HTML content and very simple programming expressions executed when a passage is displayed, or when the reader follows a link. Like Hypercard, the author directly builds what the user sees, annotating it with small bits of code to manage the state of the story.

No matter what the medium — text, pictures, film, or MR — people want to tell stories. Mixed Reality needs tools to let people easily tell stories by focusing on the story, not by writing a simulation. It needs content focused tools for authors, not programmers. This is what MrEd tries to be.

Scenes Linked Together

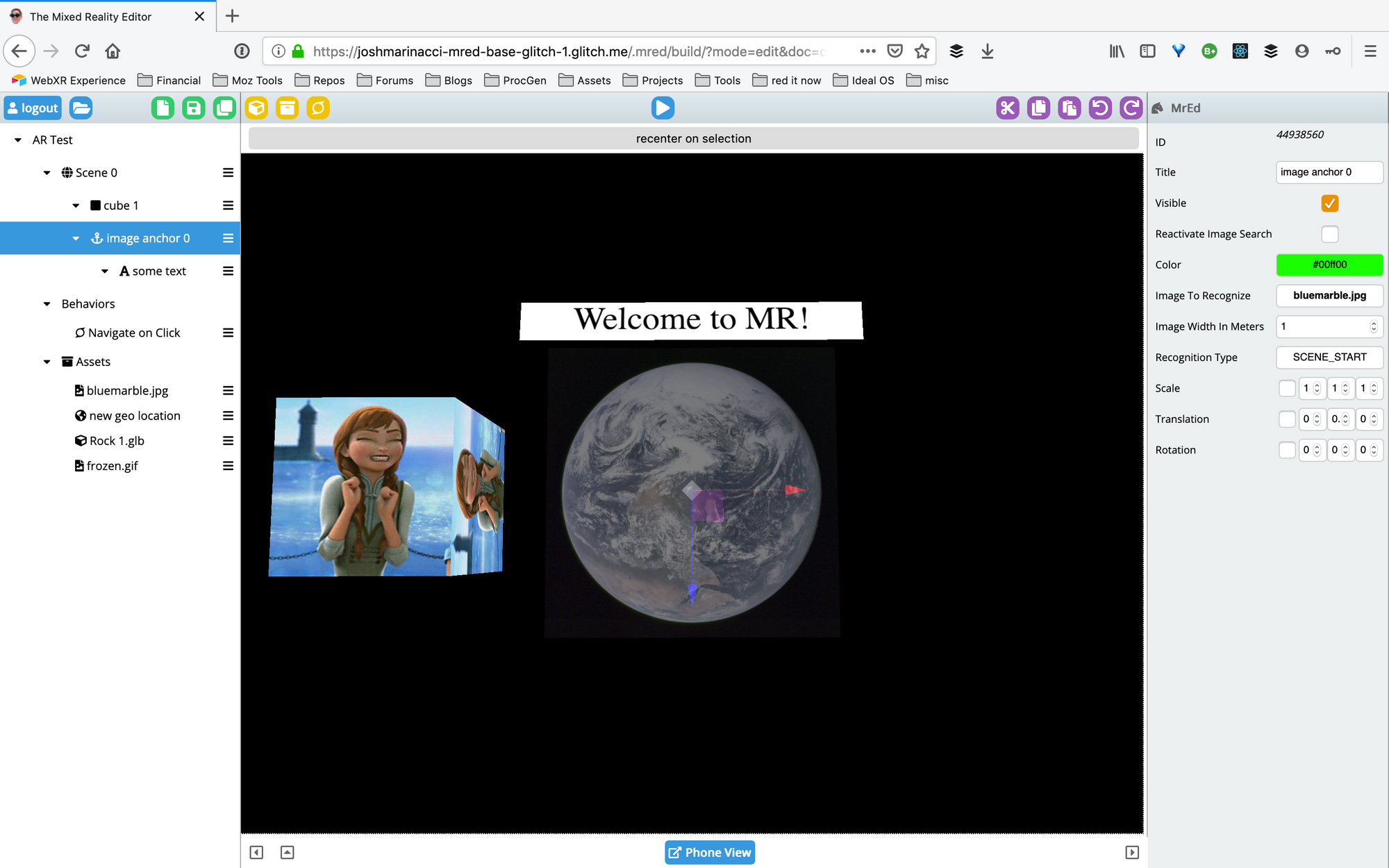

At first glance, MrEd looks a lot like other 3D editors, such as Unity3D or Amazon Sumerian. There is a scene graph on the left, letting authors create scenes, add anchors and attach content elements under them. Select an item in the graph or in the 3D windows, and a property pane appears on the right. Scripts can be attached to objects. And so on. You can position your objects in absolute space (good for VR) or relative to other objects using anchors. An anchor lets you do something like look for this poster in the real world, then position this text next to it, or look for this GPS location and put this model on it. Anchors aren’t limited to basic can also express more semantically meaningful concepts like find the floor and put this on it (we’ll dig into this in another article).

Dig into the scene graph on the left, and differences appear. Instead of editing a single world or game level, MrEd uses the metaphor of a series of scenes (inspired by Twine’s passages and Hypercard’s cards). All scenes in the project are listed, with each scene defining what you see at any given point: shapes, 3D models, images, 2D text and sounds. You can add interactivity by attaching behaviors to objects for things like ‘click to navigate’ and ‘spin around’. The story advances by moving from scene to scene; code to keep track of story state is typically executed on these scene transitions, like Hypercard and Twine. Where most 3D editors force users to build simulations for their experiences, MrEd lets authors create stores that feel more like “3D flip-books”. Within a scene, the individual elements can be animated, move around, and react to the user (via scripts), but the story progresses by moving from scene to scene. While it is possible to create complex individual scenes that begin to feel like a Unity scene, simple stories can be told through sequences of simple scenes.

We built MrEd on Glitch.com, a free web-based code editing and hosting service. With a little hacking we were able to put an entire IDE and document server into a glitch. This means anyone can share and remix their creations with others.

One key feature of MrEd is that it is built on top of a CRDT data structure to enable editing the same project on multiple devices simultaneously. This feature is critical for Mixed Reality tools because you are often switching between devices during development; the networked CRDT underpinnings also mean that logging messages from any device appear in any open editor console viewing that project, simplifying distributed development. We will tell you more details about the CRDT and Glitch in future posts.

We ran a two week class with a group of younger students in Atlanta using MrEd. The students were very interested in telling stories about their school, situating content in space around the buildings, and often using memes and ideas that were popular for them. We collected feedback on features, bugs and improvements and learned a lot from how the students wanted to use our tool.

Lessons Learned

As I said, this was an experiment, and no experiment is complete without reporting on what we learned. So what did we learn? A lot! And we are going to share it with you over the next couple of blog posts.

First we learned that idea of building a 3D story from a sequence of simple scenes worked for novice MR authors: direct manipulation with concrete metaphors, navigation between scenes as a way of telling stories, and the ability to easily import images and media from other places. The students were able to figure it out. Even more complex AR concepts like image targets and geospatial anchors were understandable when turned into concrete objects.

MrEd’s behaviors scripts are each a separate Javascript file and MrEd generates the property sheet from the definition of the behavior in the file, much like Unity’s behaviors. Compartmentalizing them in separate files means they are easy to update and share, and (like Unity) simple scripts are a great way to add interactivity without requiring complex coding. We leveraged Javascript’s runtime code parsing and execution to support scripts with simple code snippets as parameters (e.g., when the user finds a clue by getting close to it, a proximity behavior can set a global state flag to true, without requiring a new script to be written), while still giving authors the option to drop down to Javascript when necessary.

Second, we learned a lot about building such a tool. We really pushed Glitch to the limit, including using undocumented APIs, to create an IDE and doc server that is entirely remixable. We also built a custom CRDT to enable shared editing. Being able to jump back and forth between a full 2d browser with a keyboard and then XR Viewer running on an ARKit enabled iPhone is really powerful. The CRDT implementation makes this type of real time shared editing possible.

Why we are done

MrEd was an experiment in whether XR metaphors can map cleanly to a Hypercard-like visual tool. We are very happy to report that the answer is yes. Now that our experiment is over we are releasing it as open source, and have designed it to run in perpetuity on Glitch. While we plan to do some code updates for bug fixes and supporting the final WebXR 1.0 spec, we have no current plans to add new features.

Building a community around a new platform is difficult and takes a long time. We realized that our charter isn’t to create platforms and communities. Our charter is to help more people make Mixed Reality experiences on the web. It would be far better for us to help existing platforms add WebXR than for us to build a new community around a new tool.

Of course the source is open and freely usable on Github. And of course anyone can continue to use it on Glitch, or host their own copy. Open projects never truly end, but our work on it is complete. We will continue to do updates as the WebXR spec approaches 1.0, but there won’t be any major changes.

Next Steps

We are going to polish up the UI and fix some remaining bugs. MrEd will remain fully usable on Glitch, and hackable on GitHub. We also want to pull some of the more useful chunks into separate components, such as the editing framework and the CRDT implementation. And most importantly, we are going to document everything we learned over the next few weeks in a series of blogs.

If you are interested in integrating WebXR into your own rapid prototyping / educational programming platform, then please let us know. We are very happy to help you.

You can try MrEd live by remixing the Glitch and setting a password in the .env file. You can get the source from the main MrEd github repo, and the source for the glitch from the base glitch repo.