Updating the WebXR Viewer for iOS 12 / ARKit 2.0

(originally published on the Mozilla MR Blog, November 2018. Just in case Mozilla shuts down the blog after shutting down the team, I'm preserving the posts I wrote for the blog.)

Over the past few months, we've continued to leverage the features of ARKit on iOS to enhance the WebXR Viewer app and explore ideas and issues with WebXR. One big question with WebXR on modern AR and VR platforms is how best to leverage the platform to provide a frictionless experience while also supporting the advanced capabilities users will expect, in a safe and platform-independent way.

Last year, we created an experimental version of an API for WebXR and built the WebXR Viewer iOS app to allow ourselves and others to experiment with WebXR on iOS using ARKit. In the near future, the WebXR Device API will be finalized and implementations will begin to appear; we're already working on a new JavaScript library that allows the WebXR Viewer to expose the new API, and expect to ship an update with the official API shortly after the WebXR polyfill is updated to match the final API.

We recently released an update to the WebXR Viewer that fixes some small bugs and updates the app to iOS 12 and ARKit 2.0 (we haven't exposed all of ARKit 2.0 yet, but expect to over the coming months). Beyond just bug fixes, two features of the new app highlight interesting questions for WebXR related to privacy, friction and platform independence.

First, web browsers can decrease friction for users moving from one AR experience to another by managing the underlying platform efficiently and not shutting it down completely between sessions, but care needs to be taken not to expose data to applications in ways that might surprise users.

Second, some advanced features imagined for WebXR are not (yet) available in a cross-platform way, such as shareable world maps or persistent anchors. These capabilities are core to experiences users will expect, such as persistent content in the world or shared experiences between multiple co-located people.

In both cases, it is unclear what the right answer is.

Frictionless Experience and User Privacy

Hypothesis: regardless of how the underlying platform is used, when a new WebXR web page is loaded, it should only get information about the world that would be available if it were loaded for the first time, and should not see existing maps or anchors from previous pages.

Consider the image (and video) below. The image shows the results of running the "World Knowledge" sample, and spending a few minutes walking from the second floor of a house, down the stairs to the main floor, around and down the stairs to the basement, and then back up and out the front door into the yard. Looking back at the house, you can see small planes for each stair, the floor and some parts of the walls (they are the translucent green polygons). Even after just a few minutes of running ARKit, a surprising amount of information can be exposed about the interior of a space.

If the same user visits another web page, the browser could choose to restart ARKit or not. Restarting results in a high-friction user experience: all knowledge of the world is lost, requiring the user to scan their environment to reinitialize the underlying platform. Not restarting, however, might expose information to the new web page that is surprising to the user. Since the page is visited while outside the house, a user might not expect it to have access to details of the interior.

In the WebXR Viewer, we do not reinitialize ARKit for each page. We made the decision that if a page is reloaded without visiting a different XR page, we leave ARKit running and all world knowledge is retained. This allows pages to be reloaded without completely restarting the experience. When a new WebXR page is visited, we keep ARKit running, but destroy all ARKit anchors and world knowledge (i.e., ARKit ARAnchors, such as ARPlaneAnchors) that are further than some threshold distance from the user (3 meters, by default, in our current implementation).

In the video below, we demonstrate this behavior. When the user changes from the "World Knowledge" sample to the "Hit Test" sample, internally we destroy most of the anchors. When the user changes back to the "World Knowledge" sample, we again destroy most of the anchors. You can see at the end of the video that only the nearby planes still exist (the plane under the user and some of the planes on the front porch). More distant planes (inside the house, in this case) are gone. (Visiting non-XR pages does not count as visiting another page, although we also shut down ARKit after a short time, to save battery, if the browser is not on an XR page, which destroys all world knowledge as well.)
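The pruning rule described above can be sketched in a few lines. This is a minimal illustration, not the WebXR Viewer's actual code (which runs natively against ARKit): the `Anchor` shape, the `Navigation` type, and the function names are all hypothetical, and only the 3 meter default comes from the text.

```typescript
// Hypothetical, simplified stand-in for an ARKit anchor:
// an id plus a world-space position in meters.
type Vec3 = [number, number, number];

interface Anchor {
  id: string;
  position: Vec3;
}

// Assumed navigation kinds: reloading the same XR page, or visiting a new one.
type Navigation = "reload" | "new-xr-page";

// Default threshold mentioned in the post (3 meters).
const DEFAULT_KEEP_RADIUS_METERS = 3;

function distance(a: Vec3, b: Vec3): number {
  const dx = a[0] - b[0];
  const dy = a[1] - b[1];
  const dz = a[2] - b[2];
  return Math.sqrt(dx * dx + dy * dy + dz * dz);
}

// Returns the anchors that survive a navigation. A reload keeps everything;
// a new XR page keeps only anchors within keepRadius of the user.
function anchorsToKeep(
  anchors: Anchor[],
  userPosition: Vec3,
  navigation: Navigation,
  keepRadius: number = DEFAULT_KEEP_RADIUS_METERS
): Anchor[] {
  if (navigation === "reload") {
    return anchors.slice();
  }
  return anchors.filter(
    (anchor) => distance(anchor.position, userPosition) <= keepRadius
  );
}

// Example: a user standing on the porch, with one anchor far inside the house.
const exampleAnchors: Anchor[] = [
  { id: "porch-floor", position: [0.5, -1.4, 0.2] },
  { id: "front-step", position: [1.5, -1.6, -1.0] },
  { id: "basement-stair", position: [8.0, -4.0, -6.0] },
];
const userOnPorch: Vec3 = [0, 0, 0];

const keptIds = anchorsToKeep(exampleAnchors, userOnPorch, "new-xr-page").map(
  (anchor) => anchor.id
);
// keptIds is ["porch-floor", "front-step"]; the basement anchor is dropped.
```

A single distance threshold is deliberately crude: it ignores walls and line of sight, so a nearby plane on the other side of a door would still be kept.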

While this is a relatively simplistic approach to this tradeoff between friction and privacy, issues like these need to be considered when implementing WebXR inside a browser. Modern AR and VR platforms (such as Microsoft's HoloLens or Magic Leap's ML1) are capable of synthesizing and exposing highly detailed maps of the environment, and retaining significant information over time. On these platforms, the world space model is retained over time and exposed to apps, so even if the browser restarts the underlying API for each visited page, the full model of the space is available unless the browser makes an explicit choice to not expose it to the web page.

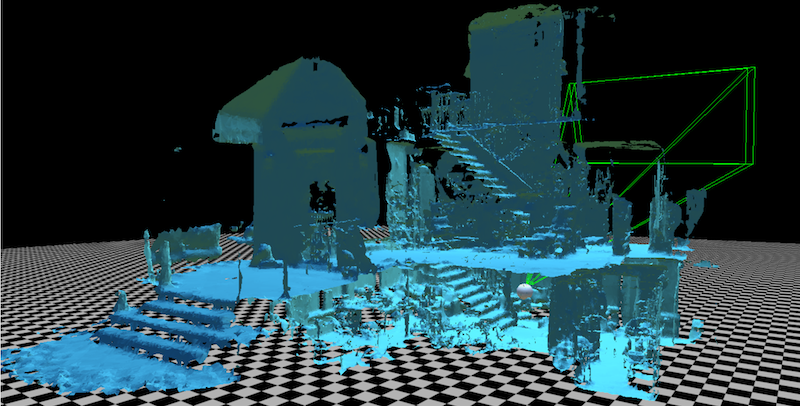

Consider, for example, a user walking a similar path for a similarly short time in the above house while wearing a Microsoft HoloLens. In this case, a map of the same environment is shown below.

This image (captured with Microsoft's debugging tools, while the user is sitting at a computer in the basement of the house, shown as the sphere and green view volume) is significantly more detailed than the ARKit planes. And it would be retained, improved and shared with all apps in this space as the user continues to wear and use the HoloLens.

In both cases, the ARKit planes and HoloLens maps were captured based on just a few minutes of walking in this house. Imagine the level of detail that might be available after extended use.

Platform-specific Capabilities

Hypothesis: advanced capabilities such as World Mapping, which are needed for user experiences that require persistent and shared content, will need cross-platform analogs to the platform silos currently available if the platform-independent character of the web is to extend to AR and VR.

ARKit 2.0 introduces the possibility of retrieving the current model of the world (the so-called ARWorldMap) used by ARKit for tracking planes and anchors in the world. The map can then be saved and/or shared with others, enabling both persistent and multi-user AR experiences.

In this version of the WebXR Viewer, we wanted to explore some ideas for persistent and shared experiences, so we added session.getWorldMap() and session.setWorldMap(map) commands to an active AR session (these can be seen in the "Persistence" sample, a small change to the "World Knowledge" sample above).
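To make the save-and-restore idea concrete, here is a minimal sketch of how a page might use these two commands for persistence. Only the method names `getWorldMap` and `setWorldMap` come from the text; their signatures, the `FakeSession` stand-in, the `MapStore` type, and both helper functions are assumptions for illustration. The real calls are presumably asynchronous; the sketch keeps them synchronous so it runs without a device.

```typescript
// The real ARWorldMap is opaque binary data; the sketch treats it as a string blob.
type WorldMapBlob = string;

// Only the two method names come from the post; the signatures are assumed.
interface WorldMapSession {
  getWorldMap(): WorldMapBlob | null;
  setWorldMap(map: WorldMapBlob): boolean;
}

// Hypothetical in-memory stand-in for an active AR session.
class FakeSession implements WorldMapSession {
  private current: WorldMapBlob | null;

  constructor(initial: WorldMapBlob | null) {
    this.current = initial;
  }

  getWorldMap(): WorldMapBlob | null {
    return this.current;
  }

  setWorldMap(map: WorldMapBlob): boolean {
    this.current = map;
    return true;
  }
}

// Hypothetical store keyed by a page-chosen name. A real page might
// persist the map in browser storage or upload it to a server instead.
type MapStore = { [key: string]: WorldMapBlob };

// Saves the session's current map under key; returns false if no map exists yet.
function saveWorldMap(session: WorldMapSession, store: MapStore, key: string): boolean {
  const map = session.getWorldMap();
  if (map === null) {
    return false;
  }
  store[key] = map;
  return true;
}

// Hands a previously saved map back to the session; returns false if none was saved.
function restoreWorldMap(session: WorldMapSession, store: MapStore, key: string): boolean {
  if (!Object.prototype.hasOwnProperty.call(store, key)) {
    return false;
  }
  return session.setWorldMap(store[key]);
}

// Example: save during one visit, restore during a later one.
const mapStore: MapStore = {};
const firstVisit = new FakeSession("opaque-map-bytes");
const saved = saveWorldMap(firstVisit, mapStore, "living-room");

const laterVisit = new FakeSession(null);
const restored = restoreWorldMap(laterVisit, mapStore, "living-room");
```

For a shared experience, the same blob would be sent to another user's device rather than stored locally; the restore step is unchanged.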

These capabilities raise questions of user privacy. ARKit's ARWorldMap is an opaque binary ARKit data structure, and may contain a surprising amount of data about the space that could be extracted by determined application developers (the format is undocumented). Because of this, we leverage the existing privacy settings in the WebXR Viewer, and allow apps to retrieve the world map if (and only if) the user has given the page access to "world knowledge".

On the other hand, the WebXR Viewer allows a page to provide an ARWorldMap to ARKit and try to use it for relocalization with no heightened permissions. In theory, such an action could allow a malicious web app to try to "probe" the world by having the browser test if the user is in a certain location. In practice, such an attack seems infeasible: loading a map resets ARKit (a highly disruptive and visible action) and relocalizing the phone against a map takes an indeterminate amount of time regardless of whether the relocalization eventually succeeds or not.
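The asymmetry between the two commands can be stated as a small policy function. This is a sketch of the rule as described in the two paragraphs above, not the WebXR Viewer's implementation; the `PagePermissions` shape, the `WorldMapRequest` type, and the function name are hypothetical.

```typescript
// Hypothetical per-page permission record. Only the "world knowledge"
// setting is named in the post; the field name is an assumption.
interface PagePermissions {
  worldKnowledge: boolean;
}

type WorldMapRequest = "getWorldMap" | "setWorldMap";

// Reading the map exposes data about the space, so it requires the
// "world knowledge" permission. Supplying a map for relocalization
// needs no heightened permission.
function isWorldMapRequestAllowed(
  request: WorldMapRequest,
  permissions: PagePermissions
): boolean {
  if (request === "getWorldMap") {
    return permissions.worldKnowledge;
  }
  return true;
}

// Example: a page without "world knowledge" may load a map but not read one.
const untrustedPage: PagePermissions = { worldKnowledge: false };
const canRead = isWorldMapRequestAllowed("getWorldMap", untrustedPage); // false
const canLoad = isWorldMapRequestAllowed("setWorldMap", untrustedPage); // true
```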

While implementing these commands was trivial, exposing this capability raises a fundamental question for the design of WebXR (beyond questions of permissions and possible threats). Specifically, how might such capabilities eventually work in a cross-platform way, given that each XR platform is implementing these capabilities differently?

We have no answer for this question. For example, some devices, such as HoloLens, allow spaces to be saved and shared, much like ARKit. But other platforms opt to only share Anchors, or do not (yet) allow sharing at all. Over time, we hope some common ground might emerge. Google has implemented their ARCore Cloud Anchors on both ARKit and ARCore; perhaps a similar approach could be taken that is more open and independent of one company's infrastructure, and could thus be standardized across many platforms.

Looking Forward

These are two of the many issues being discussed and considered by the Immersive Web Community Group as we work on the initial WebXR Device API specification. If you want to see the full power of the various XR platforms exposed and available on the Web, done in a way that preserves the open, accessible and safe character of the Web, please join the discussion and help us ensure the success of the XR Web.